Fan-Out Queries

Don't optimize for prompts. Optimize for how LLMs actually search the web.

Spotlight captures the search queries AI models use to find information, then recommends content that targets those queries.

Optimize for How LLMs Actually Search

Real Search Behavior

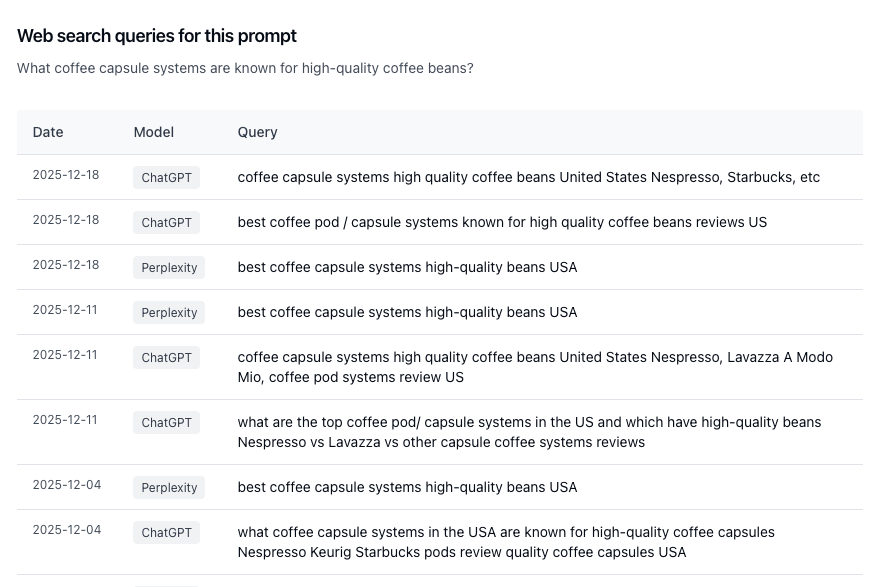

See the actual sub-queries LLMs use when searching the web. Don't guess—know exactly what they're looking for.

Strategic Optimization

Instead of optimizing for user prompts, optimize for the fan-out queries LLMs use to find information. This is where visibility actually happens.

Content Recommendations

Get specific content suggestions optimized for fan-out queries, ensuring your content appears when LLMs search the web for fresh data.

Temporal Insights

Track how fan-out queries evolve over time for each prompt, revealing how LLMs adapt their search strategies.

How Do Fan-Out Queries Work?

Query fan-out is the process by which an AI search system splits a user's query into multiple sub-queries, collects information for each, then merges the results into a single response. Spotlight captures and analyzes these fan-out queries to reveal how AI models actually search.
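To make the split, collect, and merge steps concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `decompose`, `search`, and `merge` functions, the toy index, and the example URLs are hypothetical and do not describe how ChatGPT, Google AI Mode, or Spotlight work internally.

```python
from dataclasses import dataclass


@dataclass
class Result:
    query: str    # the sub-query that produced this result
    url: str
    snippet: str


# Hypothetical toy index standing in for a real web search backend.
TOY_INDEX = {
    "best crm for startups": [
        ("https://example.com/crm-roundup", "Top CRMs compared"),
    ],
    "crm pricing comparison": [
        ("https://example.com/crm-pricing", "Pricing table"),
        ("https://example.com/crm-roundup", "Top CRMs compared"),
    ],
    "crm free tier limits": [],
}


def decompose(prompt: str) -> list[str]:
    """Stand-in for the LLM's decomposition step (hard-coded for the demo)."""
    return list(TOY_INDEX)


def search(query: str) -> list[Result]:
    """Look up one sub-query in the toy index."""
    return [Result(query, url, snippet) for url, snippet in TOY_INDEX.get(query, [])]


def merge(results: list[Result]) -> list[Result]:
    """Deduplicate by URL, keeping the first occurrence of each page."""
    seen: dict[str, Result] = {}
    for result in results:
        seen.setdefault(result.url, result)
    return list(seen.values())


def fan_out(prompt: str) -> tuple[list[str], list[Result]]:
    """Split the prompt, collect results per sub-query, then merge them."""
    sub_queries = decompose(prompt)
    collected = [r for q in sub_queries for r in search(q)]
    return sub_queries, merge(collected)
```

Calling `fan_out("Which CRM should my startup use?")` on this toy data returns three sub-queries and two merged pages. The sub-queries, not the prompt, are what reach the search backend.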

Query decomposition

When AI models like ChatGPT and Google AI Mode need fresh information, they break down your prompt into multiple sub-queries. A single user question becomes several targeted searches.

Query capture

Spotlight captures the fan-out queries that supported LLMs generate when they search the web for fresh data sources. We see the exact search terms they use, not just the original prompt.

Analysis and insights

We analyze all fan-out queries used over time for each prompt, providing insight into how LLMs change their search approach. You see the evolution of their search strategy.
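One simple way to picture this kind of tracking is to compare dated snapshots of the fan-out queries recorded for a single prompt. The sketch below is illustrative only: the snapshot structure and the `diff_snapshots` helper are hypothetical and are not Spotlight's actual data model or API.

```python
from datetime import date


def diff_snapshots(snapshots: dict[date, set[str]]) -> list[dict]:
    """Report which fan-out queries appeared or disappeared between
    consecutive snapshots, ordered by date."""
    ordered = sorted(snapshots)
    changes = []
    for prev, curr in zip(ordered, ordered[1:]):
        changes.append({
            "from": prev,
            "to": curr,
            "added": sorted(snapshots[curr] - snapshots[prev]),
            "removed": sorted(snapshots[prev] - snapshots[curr]),
        })
    return changes


# Hypothetical snapshots for one prompt, keyed by capture date.
EXAMPLE_SNAPSHOTS = {
    date(2025, 1, 1): {"best crm for startups", "crm pricing comparison"},
    date(2025, 2, 1): {"crm pricing comparison", "crm free tier limits"},
}
```

Running `diff_snapshots(EXAMPLE_SNAPSHOTS)` yields one change record in which "crm free tier limits" was added and "best crm for startups" was removed.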

Content optimization

Based on the fan-out queries, Spotlight recommends content optimized for how LLMs actually search. Instead of optimizing for the prompt, optimize for the queries LLMs use to find answers.
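As a rough illustration of what optimizing for queries rather than prompts can mean in practice, the sketch below scores how much of each fan-out query's vocabulary a page already covers. The token-overlap heuristic, the `coverage` and `uncovered_queries` helpers, and the 0.6 threshold are all assumptions chosen for the example. The source does not describe how Spotlight generates its recommendations.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def coverage(query: str, page_text: str) -> float:
    """Fraction of the query's tokens that also appear in the page text."""
    query_tokens = tokens(query)
    if not query_tokens:
        return 0.0
    return len(query_tokens & tokens(page_text)) / len(query_tokens)


def uncovered_queries(queries: list[str], page_text: str,
                      threshold: float = 0.6) -> list[str]:
    """Fan-out queries the page covers below the (assumed) threshold."""
    return [q for q in queries if coverage(q, page_text) < threshold]
```

For a page reading "Our CRM pricing comparison covers plans for startups.", the query "crm pricing comparison" is fully covered, while "crm free tier limits" scores 0.25 and would be flagged as a content gap.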

Why Optimize for Fan-Out Queries?

The gap between what users ask and what LLMs search for is where visibility happens.

Direct Alignment

Your content matches the exact queries LLMs use when searching, not just the original user prompt.

Fresh Data Focus

Fan-out queries reveal when LLMs search for fresh data, helping you target content that appears in real-time searches.

Search Strategy

Understand how LLMs decompose complex questions into simpler searches, then optimize for those patterns.

Temporal Tracking

See how fan-out queries evolve over time, revealing shifts in how LLMs approach the same prompts.

Competitive Edge

Most brands optimize for prompts. You'll optimize for the actual search queries that drive visibility.

Actionable Insights

Get specific content recommendations based on real fan-out queries, not theoretical optimization strategies.

Frequently Asked Questions

What are fan-out queries?

Fan-out queries are sub-queries that AI search systems generate when they break down a user's prompt into multiple searches. Instead of searching for the original prompt directly, LLMs decompose it into several targeted queries, collect information for each, then merge the results into a single response.

Which AI models use fan-out queries?

AI search systems like Google AI Mode and ChatGPT use query fan-out to improve response quality. When these models need fresh information from the web (rather than relying on their training data), they generate multiple sub-queries to gather comprehensive information.

Why should I optimize for fan-out queries instead of prompts?

When LLMs search the web for fresh data, they don't search for the original user prompt. They search for the fan-out queries they've generated. Optimizing for these actual search queries ensures your content appears when LLMs are actively searching, not just when users ask questions.

Are fan-out queries available for all prompts?

No. Fan-out queries are available only for ChatGPT and some other LLMs, and only when they search the web for fresh data sources instead of answering from their training data. If an LLM answers from its training data, no fan-out queries are generated.

How do I see fan-out queries in Spotlight?

In your dashboard, Spotlight shows all fan-out queries used over time for each prompt. You can see the evolution of how LLMs search for information, track changes in their search strategy, and get content recommendations optimized for these queries.

What kind of content recommendations do I get?

Based on the fan-out queries captured, Spotlight recommends content that is optimized to appear when LLMs search the web. These recommendations are specifically tailored to match the sub-queries that AI models actually use, ensuring maximum visibility during their search process.
